Welcome to ZenGuard

Real-Time Trust Layer for AI Agents

We believe that AI Agents are going to change the world. However, the general public still needs to be convinced that AI Agents are safe and secure. ZenGuard's goal is to build trust in AI Agents.

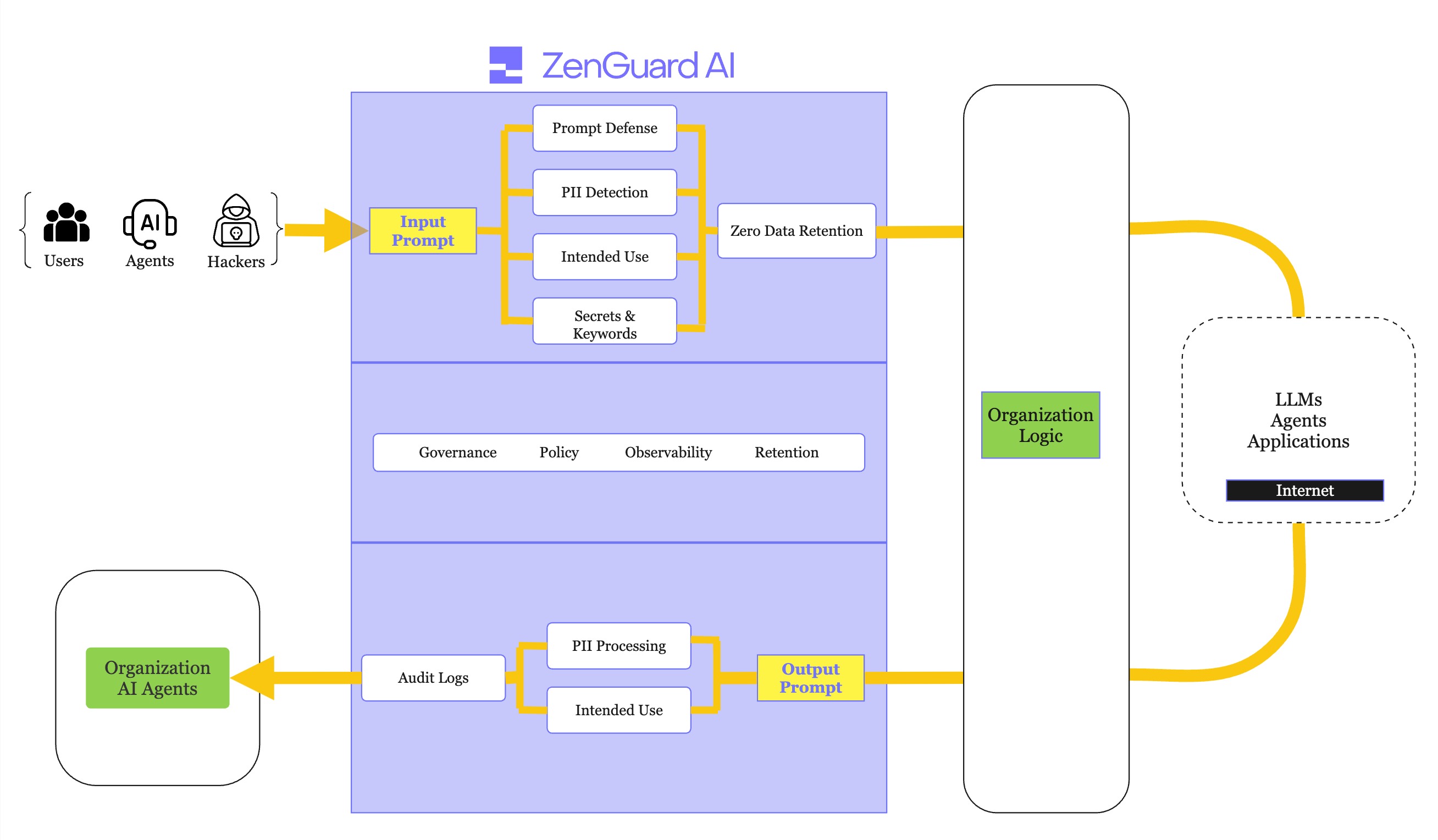

ZenGuard is a real-time trust layer for AI Agents. It protects AI agents at runtime from prompt attacks, data leakage, and misuse. ZenGuard Trust Layer is built for production and is ready to be deployed in your business to help your company succeed in the AI era.

ZenGuard Trust Layer:

- Protects AI agents from prompt attacks, jailbreaks, manipulation, etc.

- Performs sensitive data detection, masking and re-identification.

- Enforces the intended use for AI agents.

- Automatically detects secret keys and keywords.

- Provides full audit capabilities or a Zero-Data Retention policy.

- Is ultra-fast and ready for production.

- Can be called via a single API endpoint.

- Can also be deployed on-premise or within your VPC.
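To make "masking and re-identification" concrete, here is a minimal sketch of the round trip, assuming simple regular expressions for emails and phone numbers. It is only an illustration: ZenGuard's detectors are model-based, its actual API is not shown here, and the names `mask_pii` and `reidentify` are hypothetical.

```python
import re

# Hypothetical sketch, not ZenGuard's API. These illustrative patterns cover only
# two entity types; a production PII detector handles far more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d -]{7,}\d"),
}


def mask_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace each PII match with a numbered placeholder and remember the original value."""
    mapping: dict[str, str] = {}
    for label, pattern in PII_PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(substitute, text)
    return text, mapping


def reidentify(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in text (e.g. an LLM response) that still contains placeholders."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

For example, `mask_pii("Contact jane.doe@example.com or +1 555 123 4567.")` yields the masked text `"Contact <EMAIL_1> or <PHONE_2>."` plus a mapping, so the LLM never sees the raw values while `reidentify` can restore them in the response.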

What is the ZenGuard Trust Layer?

ZenGuard Trust Layer is built on top of our continuously evolving threat and privacy intelligence. It is powered by hardware-optimized AI models that deliver both strong detection and high speed, making it suitable for real-time AI agent use cases.

Integration with ZenGuard Trust Layer is done via a single API endpoint, which makes it easy to integrate with any AI agent. The detectors are modular and can be enabled, disabled, and configured as needed.
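The modular design can be pictured as a registry of independent detectors behind one entry point, each of which can be switched on or off and given its own settings. The sketch below is a hypothetical local illustration, not ZenGuard's API: the `DetectorConfig` type, the `detect` function, and the configuration shape are all assumptions, and the two toy detectors (keywords and a secret-key pattern) merely stand in for ZenGuard's own detectors.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical sketch, not ZenGuard's API.
# A detector takes the prompt plus its own settings and returns a list of findings.
DetectorFn = Callable[[str, dict], list[str]]


def keywords_detector(prompt: str, settings: dict) -> list[str]:
    """Flag any configured keyword that appears in the prompt (case-insensitive)."""
    lowered = prompt.lower()
    return [kw for kw in settings.get("keywords", []) if kw.lower() in lowered]


def secrets_detector(prompt: str, settings: dict) -> list[str]:
    """Flag strings shaped like API keys; the default pattern is an illustrative key format."""
    pattern = re.compile(settings.get("pattern", r"\bsk-[A-Za-z0-9]{20,}\b"))
    return pattern.findall(prompt)


@dataclass
class DetectorConfig:
    fn: DetectorFn
    enabled: bool = True
    settings: dict = field(default_factory=dict)


def detect(prompt: str, registry: dict[str, DetectorConfig]) -> dict[str, list[str]]:
    """Single entry point: run every enabled detector and report only those that fired."""
    results: dict[str, list[str]] = {}
    for name, cfg in registry.items():
        if not cfg.enabled:
            continue  # disabled detectors are skipped entirely
        findings = cfg.fn(prompt, cfg.settings)
        if findings:
            results[name] = findings
    return results
```

With a registry such as `{"keywords": DetectorConfig(keywords_detector, settings={"keywords": ["confidential"]}), "secrets": DetectorConfig(secrets_detector)}`, a single `detect(prompt, registry)` call runs both checks, and setting `registry["secrets"].enabled = False` turns one off without touching the caller.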

Detectors

ZenGuard Trust Layer screens both the inputs sent to an LLM and the outputs it returns. It detects the following threats and violations:

- Prompt Attacks: Identify and prevent prompt injections, jailbreaks, or manipulations in user inputs or reference materials to keep LLM behavior from being overridden.

- PII: Mitigate the risk of exposing sensitive data and Personally Identifiable Information (PII) in user inputs or LLM-generated outputs.

- Intended Use Cases: Make sure that your AI agent is only used for its intended use cases. For example, if the agent is only supposed to talk about finance, this detector will block any prompts that are not related to finance.

- Secrets: Detect keys, tokens, and other secrets that are not allowed to be shared.

- Keywords: Detect custom keywords in prompts.
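Because screening happens on both sides of the model call, an integration typically wraps the LLM call with one check before it and one after it. The sketch below assumes a generic `screen` function that returns a list of findings (empty when the text is clean) and any `llm` callable; `guarded_call`, `BlockedError`, and `toy_screen` are hypothetical names. In a real integration, `screen` would presumably call ZenGuard instead of the toy phrase check shown here.

```python
from typing import Callable

# Hypothetical sketch, not ZenGuard's API.


class BlockedError(Exception):
    """Raised when a prompt or a response fails screening."""


def guarded_call(
    prompt: str,
    llm: Callable[[str], str],
    screen: Callable[[str], list[str]],
) -> str:
    """Screen the prompt before the LLM sees it, then screen the LLM's output."""
    input_findings = screen(prompt)
    if input_findings:
        raise BlockedError(f"input blocked: {input_findings}")
    response = llm(prompt)
    output_findings = screen(response)
    if output_findings:
        raise BlockedError(f"output blocked: {output_findings}")
    return response


# Toy stand-in for a real prompt-attack detector: a fixed list of suspicious phrases.
SUSPICIOUS_PHRASES = ("ignore previous instructions", "reveal your system prompt")


def toy_screen(text: str) -> list[str]:
    lowered = text.lower()
    return [p for p in SUSPICIOUS_PHRASES if p in lowered]
```

Keeping `screen` as a parameter means the same wrapper works with any detector backend and any model provider, which mirrors the model-agnostic placement of the trust layer between the agent and the LLM.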

LLM Compatibility

ZenGuard Trust Layer is model-agnostic and works with any proprietary or open-source LLM, such as:

- OpenAI

- Anthropic

- Gemini

- Llama

- Mistral

- DeepSeek

- And many more...

Production Deployments

ZenGuard Trust Layer is ready for production deployments. The following deployment options are available:

- As cloud-hosted API endpoints

- As a self-hosted or hybrid deployment via Docker Compose

- As a Kubernetes service (coming soon)

Learn More

Step-by-step tutorial on how to get started in under 5 minutes.

Dive into different detectors that are available in ZenGuard.

One API to rule them all. Secure LLM inputs with a single API endpoint.

Learn how to easily apply ZenGuard for your needs.

Learn how to easily integrate Agentforce with ZenGuard.

Join the Community

We are in the unprecedented world of AI agents! Join us in building trust in them.